Paul Gavrikov

I am an independent researcher specializing in multi-modal vision systems. Previously, I was a postdoctoral researcher at the Tübingen AI Center, working with Hilde Kuehne, and at Goethe University Frankfurt. I received my PhD from the University of Mannheim under the supervision of Janis Keuper. I also hold a BSc and an MSc in Computer Science from the University of Freiburg.

I'm always happy to connect with students and collaborators. If my research sparks an idea or a question, I'd love to hear from you!
Email / X / Bluesky / GitHub / Google Scholar / LinkedIn / Medium / dblp


News


Research

My current research zooms in on questions around multi-modal foundation models; before that, I explored generalization and robustness in image classifiers. A long time ago (but not in a galaxy far, far away), I worked on Bluetooth Low Energy. Highlights.


VisualOverload: Visual Understanding of VLMs in Really Dense Scenes
Paul Gavrikov, Wei Lin, M. Jehanzeb Mirza, Soumya Jahagirdar, Muhammad Huzaifa, Sivan Doveh, Serena Yeung-Levy, James Glass, Hilde Kuehne
Under Review
arXiv / benchmark / code

We introduce VisualOverload, a fresh VQA benchmark that focuses on detailed, knowledge-free vision tasks in densely populated art images. The results indicate a significant performance gap, with frontier models struggling to achieve high accuracy, suggesting that basic visual understanding in complex scenes remains unsolved.


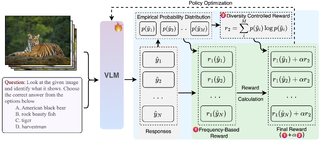

TTRV: Test-Time Reinforcement Learning for Vision Language Models
Akshit Singh, Shyam Marjit, Wei Lin, Paul Gavrikov, Serena Yeung-Levy, Hilde Kuehne, Rogerio Feris, Sivan Doveh, James Glass, M. Jehanzeb Mirza
Under Review
arXiv / code

We introduce TTRV, a framework that allows Vision-Language Models (VLMs) to adapt and improve during inference, without any labeled data. By combining frequency-based rewards and diversity control, TTRV applies reinforcement learning directly at test time to make models more accurate and confident.
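As a rough, hypothetical illustration of what "frequency-based rewards" and "diversity control" could mean, the Python sketch below looks at several answers sampled for the same test input. It rewards each answer by how often it occurs among the samples, and it measures the entropy of the empirical answer distribution, where lower entropy means less diverse outputs. The function names and exact reward shapes are assumptions, not the TTRV implementation, and the reinforcement-learning update itself is omitted.

```python
import math
from collections import Counter


def frequency_rewards(answers):
    """Hypothetical reward: each sampled answer scores its empirical frequency.

    Answers the model produces more often receive a higher reward, which
    serves as a label-free pseudo-signal at test time.
    """
    counts = Counter(answers)
    n = len(answers)
    return [counts[a] / n for a in answers]


def answer_entropy(answers):
    """Shannon entropy (in nats) of the empirical answer distribution.

    Lower entropy means the samples agree more, i.e. less output diversity.
    """
    counts = Counter(answers)
    n = len(answers)
    return -sum((c / n) * math.log(c / n) for c in counts.values())


# Four hypothetical samples from a VLM for the same image and question.
samples = ["cat", "cat", "dog", "cat"]
print(frequency_rewards(samples))  # [0.75, 0.75, 0.25, 0.75]
print(round(answer_entropy(samples), 4))
```

In a full method, these scores would feed a policy-gradient update, for example a term that penalizes high entropy. That wiring is left out here because the sketch only illustrates the two signals.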


Can We Talk Models Into Seeing the World Differently?
Paul Gavrikov, Jovita Lukasik, Steffen Jung, Robert Geirhos, M. Jehanzeb Mirza, Margret Keuper, Janis Keuper
ICLR, 2025 (initially at CVPR Workshops, 2024)
arXiv / paper / code

We investigate the propagation of visual biases in LLM-powered Vision-Language Models (VLMs). Through the lens of texture/shape bias, we find that multi-modal fusion with LLMs not only alters the bias inherited from the vision encoder but also lets us change that bias simply by prompting the model. Put casually: we can talk the model into seeing the world differently.
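Shape bias is commonly measured on cue-conflict images, for example a cat shape rendered with elephant texture, following Geirhos et al. It is defined as the fraction of shape decisions among all decisions that match either the shape class or the texture class. The Python sketch below implements that standard definition. The tuple-based input format is an assumption made for illustration.

```python
def shape_bias(results):
    """Compute shape bias from cue-conflict predictions.

    `results` is an iterable of (predicted, shape_label, texture_label) tuples.
    Images whose shape and texture classes coincide are skipped, and
    predictions matching neither cue do not count toward the ratio.
    """
    shape_hits = texture_hits = 0
    for predicted, shape_label, texture_label in results:
        if shape_label == texture_label:
            continue  # not a genuine cue conflict
        if predicted == shape_label:
            shape_hits += 1
        elif predicted == texture_label:
            texture_hits += 1
    decided = shape_hits + texture_hits
    return shape_hits / decided if decided else float("nan")


preds = [
    ("cat", "cat", "elephant"),       # shape decision
    ("elephant", "cat", "elephant"),  # texture decision
    ("clock", "clock", "bear"),       # shape decision
    ("dog", "bear", "clock"),         # matches neither cue, ignored
]
print(shape_bias(preds))  # 2 shape vs. 1 texture decision -> 0.666...
```

Steering a VLM toward shape or texture by prompting would show up as a shift in this ratio, measured on the same cue-conflict images.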


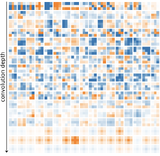

How Do Training Methods Influence the Utilization of Vision Models?
Paul Gavrikov, Shashank Agnihotri, Margret Keuper, Janis Keuper
NeurIPS Workshops, 2024
arXiv / code

In this preliminary study, we analyze the influence of training methods on the utilization of layers in ImageNet classification models while keeping training data and the architecture fixed.


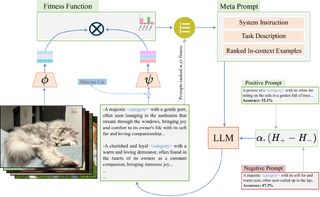

GLOV: Guided Large Language Models as Implicit Optimizers for Vision Language Models
M. Jehanzeb Mirza, Mengjie Zhao, Zhuoyuan Mao, Sivan Doveh, Wei Lin, Paul Gavrikov, Michael Dorkenwald, Shiqi Yang, Saurav Jha, Hiromi Wakaki, Yuki Mitsufuji, Horst Possegger, Rogerio Feris, Leonid Karlinsky, James Glass
TMLR, 2025
arXiv / paper / code

We introduce GLOV, a method that enables LLMs to optimize VLMs by generating and refining prompts for downstream vision tasks while being guided by previously explored prompts, achieving significant performance improvements across various benchmarks.


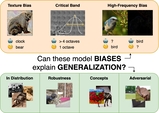

Can Biases in ImageNet Models Explain Generalization?
Paul Gavrikov, Janis Keuper
CVPR, 2024
paper / arXiv / code

We investigate the generalization capabilities of neural networks from the perspective of shape bias, spectral biases, and the critical band. Our results show that, even when the architecture is fixed, these indicators are not reliable predictors of generalization performance.


Improving Native CNN Robustness with Filter Frequency Regularization
Jovita Lukasik*, Paul Gavrikov*, Janis Keuper, Margret Keuper
TMLR, 2023
paper / code

We propose controlling the frequency content of learned convolution filters in vision CNNs. This results in models that are natively more robust to adversarial attacks and corruptions, generalize better, and are generally more aligned with human vision.


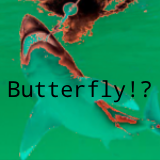

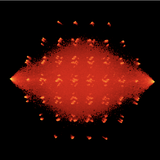

Don't Look into the Sun: Adversarial Solarization Attacks on Image Classifiers
Paul Gavrikov, Janis Keuper
Preprint
arXiv / code

We present a new adversarial attack based on image solarization. Despite being conceptually simple, the attack is effective, cheap to compute, and does not risk destroying the global structure of natural images. It also serves as a universal black-box attack against models trained with the legacy ImageNet training recipe.
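Solarization is a simple point operation: every pixel value at or above a threshold is inverted. This matches the behavior of Pillow's `ImageOps.solarize`. The Python sketch below applies it to 8-bit grayscale values and wraps it in a naive black-box search that queries a classifier only for its label, returning the first threshold that flips the prediction. The classifier is a hypothetical stand-in, and the descending threshold sweep is an assumed search strategy, not necessarily the one used in the paper.

```python
def solarize(pixels, threshold):
    """Invert every 8-bit pixel value at or above `threshold`; keep the rest."""
    return [255 - p if p >= threshold else p for p in pixels]


def solarization_attack(pixels, classify, thresholds=range(255, -1, -1)):
    """Black-box search: return (threshold, adversarial pixels) or None.

    Only the predicted label from `classify` is used, so no gradients are
    needed. Thresholds run from mild (255) to strong (0) solarization.
    """
    clean_label = classify(pixels)
    for t in thresholds:
        candidate = solarize(pixels, t)
        if classify(candidate) != clean_label:
            return t, candidate
    return None


def toy_classifier(pixels):
    """Hypothetical stand-in model: 'bright' if mean intensity exceeds 127."""
    return "bright" if sum(pixels) / len(pixels) > 127 else "dark"


image = [200, 220, 180, 240]  # a tiny, bright "image"
print(solarize([0, 100, 200, 255], 128))  # [0, 100, 55, 0]
print(solarization_attack(image, toy_classifier))  # (220, [200, 35, 180, 15])
```

Because the transform only remaps intensities pixel by pixel, edges and object layout stay in place. This is the intuition behind the claim that the attack does not destroy the global structure of natural images.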


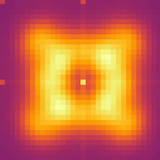

On the Interplay of Convolutional Padding and Adversarial Robustness
Paul Gavrikov, Janis Keuper
ICCV Workshops, 2023
paper / arXiv

Our study examines the relationship between padding in Convolutional Neural Networks (CNNs) and vulnerability to adversarial attacks. We show that adversarial attacks produce different perturbation anomalies at image boundaries depending on the padding mode, and we discuss which mode is best suited for adversarial settings (spoiler: it's zero padding).
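To make the padding modes concrete, the Python sketch below pads a single row of pixel values by one element on each side under four common modes. The mode names mirror the `padding_mode` options of PyTorch's `nn.Conv2d` (`'zeros'`, `'reflect'`, `'replicate'`, `'circular'`). The helper `pad_row` itself is a hypothetical illustration, not library code. Note that only zero padding inserts values that are not copied from the image.

```python
def pad_row(row, mode, width=1):
    """Pad a 1-D row by `width` elements on both sides (requires width < len(row))."""
    if mode == "zeros":
        # Constant zeros, independent of the image content.
        return [0] * width + row + [0] * width
    if mode == "reflect":
        # Mirror around the edge element without repeating it.
        return row[width:0:-1] + row + row[-2:-width - 2:-1]
    if mode == "replicate":
        # Repeat the edge element.
        return [row[0]] * width + row + [row[-1]] * width
    if mode == "circular":
        # Wrap around, as if the row tiled periodically.
        return row[-width:] + row + row[:width]
    raise ValueError(f"unknown padding mode: {mode}")


for mode in ("zeros", "reflect", "replicate", "circular"):
    print(mode, pad_row([1, 2, 3], mode))
```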


An Extended Study of Human-like Behavior under Adversarial Training
Paul Gavrikov, Janis Keuper, Margret Keuper
CVPR Workshops, 2023
paper / code / arXiv

Our analysis reveals how different forms of adversarial training (AT) affect the human-like behavior of CNNs and Transformers. Additionally, we propose a hypothesis, from a frequency perspective, for why AT increases shape bias and in which scenarios it can improve out-of-distribution generalization.


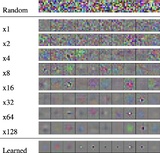

The Power of Linear Combinations: Learning with Random Convolutions
Paul Gavrikov, Janis Keuper
In Review
arXiv

We question whether learning spatial convolution filters is necessary. Even with default i.i.d. random initializations, we achieve 75.66% validation accuracy with ResNet-50 on ImageNet without ever learning any spatial convolution weights. Additionally, random filters can be more robust against adversarial attacks than learned filters.
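The linear-algebra intuition behind the title can be checked in a few lines. A 3x3 filter has nine weights, so nine linearly independent random filters span every possible 3x3 filter. Because convolution is linear, a learned 1x1 convolution that mixes the outputs of frozen random filters is equivalent to convolving with their weighted sum. The Python sketch below recovers a Sobel filter from nine i.i.d. Gaussian filters by solving for the mixing weights. It is a hypothetical single-channel illustration, not the training setup from the paper.

```python
import random


def solve(a, b):
    """Solve a @ x = b for a square matrix via Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


random.seed(0)
# Nine frozen random 3x3 filters, each flattened row-major to 9 weights.
random_filters = [[random.gauss(0.0, 1.0) for _ in range(9)] for _ in range(9)]

# Target: a horizontal Sobel filter, flattened row-major.
sobel = [-1, 0, 1, -2, 0, 2, -1, 0, 1]

# Column j of `a` holds random filter j, so a @ coeffs is a weighted sum of filters.
a = [[random_filters[j][i] for j in range(9)] for i in range(9)]
coeffs = solve(a, sobel)  # plays the role of the learnable 1x1 weights

reconstructed = [sum(coeffs[j] * random_filters[j][i] for j in range(9)) for i in range(9)]
max_err = max(abs(r - t) for r, t in zip(reconstructed, sobel))
print(f"max reconstruction error: {max_err:.2e}")
```

With fewer than nine random filters, or with many input channels sharing a small random bank, exact reconstruction is no longer guaranteed. That regime is where the empirical question of whether learning spatial weights is necessary becomes interesting.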


Does Medical Imaging learn different Convolution Filters?
Paul Gavrikov, Janis Keuper
NeurIPS Workshops, 2022
arXiv

Earlier, we showed that, on average, convolution filters show only minor drifts across various dimensions, including the learned task, image domain, or dataset. However, medical imaging models showed significant outliers in the form of spiky filter distributions. We revisit this observation and perform an in-depth analysis of medical imaging models.


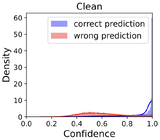

Robust Models are less Over-Confident
Julia Grabinski, Paul Gavrikov, Janis Keuper, Margret Keuper
NeurIPS, 2022 (initially at ICML Workshops, 2022)
paper / arXiv / code

Adversarial Training (AT) leads to models that are significantly less overconfident in their decisions than non-robust models, even on clean data. The analysis shows that not only AT but also the models' building blocks (like activation functions and pooling) have a strong influence on the models' prediction confidences.


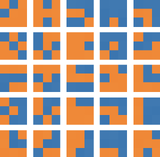

Adversarial Robustness through the Lens of Convolutional Filters
Paul Gavrikov, Janis Keuper
CVPR Workshops, 2022
paper / arXiv / code

We investigate the 3x3 convolution filters that form in adversarially trained robust models and find that these models form more diverse, less sparse, and more orthogonal filters than their normal counterparts. The largest differences are found in the deepest layers and in the very first convolution layer, which forms highly distinct thresholding filters.


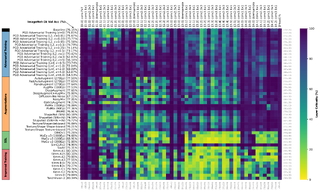

CNN Filter DB: An Empirical Investigation of Trained Convolutional Filters
Paul Gavrikov, Janis Keuper
CVPR, 2022 (Oral Presentation)
paper / arXiv / code

We collected and publicly released a dataset of over 1.4 billion 3x3 convolution filters from hundreds of trained CNNs. Our observations show that, surprisingly, models learn highly similar filter pattern distributions independent of task and dataset, but these distributions differ by model architecture. We also propose methods to measure filter quality in order to detect overparameterization or underfitting, and show that many publicly available models suffer from "degenerated" filters.


An Empirical Investigation of Model-to-Model Distribution Shifts in Trained Convolutional Filters
Paul Gavrikov, Janis Keuper
NeurIPS Workshops, 2021
paper / arXiv / code

This paper looks at distribution shifts in the filter weights of CNNs trained for various computer vision tasks. We collected data from hundreds of trained CNNs and analyzed the distribution shifts along different axes of meta-parameters. We found interesting distribution shifts between trained filters and argue that they are a valuable source for further investigating how shifts in the input data affect the generalization abilities of CNN models.


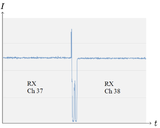

A Low Power and Low Latency Scan Algorithm for Bluetooth Low Energy Radios with Energy Detection Mechanisms
Paul Gavrikov, Matthias Lai, Thomas Wendt
APWiMob, 2019 (Best Paper Award)
paper

A new, more efficient algorithm for Bluetooth scanning is presented that uses less power and can scale with incoming network traffic. The algorithm does not require any changes to advertisers, so it is compatible with existing devices, and a performance evaluation shows that it is more efficient than existing methods.


Exploring non-idealities in real device implementations of Bluetooth Mesh
Paul Gavrikov, Matthias Lai, Thomas Wendt
Wireless Telecommunications Symposium 2019 (presentation) and IJITN
paper

We compare the performance of Bluetooth Mesh implementations on real chipsets against the ideal implementation of the specification. We show that real chipsets exhibit non-idealities both in their implementation of the underlying Bluetooth Low Energy specification and in their implementation of Mesh, which introduce erratic transmission and reception behavior. These effects impact transmission rate, reception rate, and latency, and have an even more significant impact on average power consumption.


Using Bluetooth Low Energy to trigger a robust ultra-low power FSK wake-up receiver
Paul Gavrikov, Pascal E. Verboket, Tolgay Ungan, Markus Müller, Matthias Lai, Christian Schindelhauer, Leonhard M. Reindl, Thomas Wendt
ICECS, 2018
paper

We discuss a new approach to using BLE packets to create an FSK-like addressable wake-up packet. A wake-up receiver system was developed from off-the-shelf components to detect these packets. This system is more robust than traditional OOK wake-up systems and has a sensitivity of -47.8 dBm at a power consumption of 18.5 µW during passive listening. The system has a latency of 31.8 ms with a symbol rate of 1437 Baud.

Academic Career



Design and source code from Jon Barron's website